Measuring the Intensity of the World’s Most Powerful Lasers

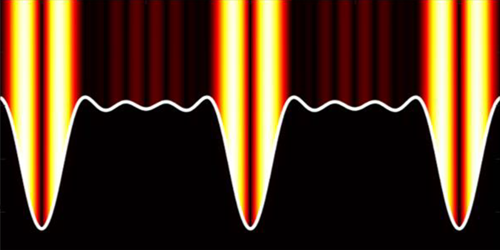

Scientists are building extremely powerful lasers. For around a trillionth of a second, one of these machines will emit thousands of times the power of the US electric grid. Such devices could allow researchers to explore unsolved problems related to fundamental physics principles and to develop innovative laser-based technologies. But these applications require precise knowledge of the intensity of any such laser, a parameter that is difficult to measure, as no known material can withstand the anticipated extreme conditions of the laser beams. Now Wendell Hill at the University of Maryland, College Park, and his colleagues demonstrate a technique that uses electrons to determine this intensity [1].

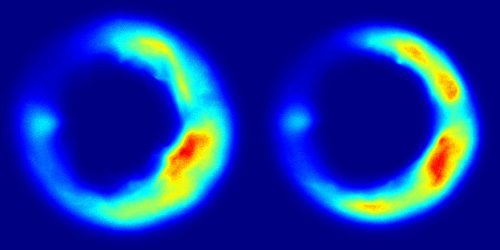

For their demonstration, the researchers fired a high-power laser pulse at a low-density gas, causing the gas to release electrons. The laser’s electromagnetic field then propelled these electrons forward and out of the laser beam. The team observed the angular distribution of the ejected electrons in real time using surfaces called image plates that act like photographic film.

Analyzing these image plates, Hill and his colleagues find that the angle of the emitted electrons relative to the beam’s direction is inversely proportional to a laser’s intensity, allowing this angle to serve as an intensity measure. The researchers demonstrate their method for laser intensities of 10¹⁹–10²⁰ W/cm² and suggest that it could be applied to intensities in the range of 10¹⁸–10²¹ W/cm². They say that the approach could help scientists in field testing the next generation of ultrapowerful lasers and in studying, with high precision, the interaction between matter and strong electromagnetic fields.
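A minimal Python sketch of the read-out step follows. It takes the stated inverse proportionality at face value (θ = k/I) and is not the authors' analysis; the calibration constant k, and the reference angle and intensity used to fix it, are hypothetical.

```python
def calibrate(theta_ref_deg: float, intensity_ref: float) -> float:
    """Fix the hypothetical constant k in theta = k / I from one reference shot
    whose intensity (W/cm^2) is assumed to be known by other means."""
    return theta_ref_deg * intensity_ref


def intensity_from_angle(theta_deg: float, k: float) -> float:
    """Invert theta = k / I to estimate the peak intensity in W/cm^2."""
    if theta_deg <= 0:
        raise ValueError("emission angle must be positive")
    return k / theta_deg


# Hypothetical calibration: electrons at 20 degrees for a 1e19 W/cm^2 shot.
k = calibrate(20.0, 1e19)
# Under this assumption, a 4-degree emission angle implies five times the intensity.
estimate = intensity_from_angle(4.0, k)
print(f"estimated intensity: {estimate:.1e} W/cm^2")
```

Because the assumed relation is a plain inverse, a given relative error in the measured angle becomes the same relative error in the inferred intensity, so the angular resolution of the detector sets the precision of the estimate.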

–Ryan Wilkinson

Ryan Wilkinson is a Corresponding Editor for Physics Magazine based in Durham, UK.

References

- S. Ravichandran et al., “Imaging electron angular distributions to assess a full-power petawatt-class laser focus,” Phys. Rev. A 108, 053101 (2023).

Journal Club: Experiments & Phenomenology / 30 Oct 2023, 11:00 Tehran Time

School of Particles and Accelerators Journal Club: Experiments & Phenomenology / Virtual format

Date: Monday, Oct 30, 2023 / Monday, 8 Aban 1402
Time: 11:00 AM - 12:00 PM (Tehran time)

Speaker: Ms. Hedieh Pouyanrad
Affiliation: Amirkabir University of Technology

Title: Laser-IORT: a laser-driven source of relativistic electrons suitable for Intra-Operative Radiation Therapy of tumors
Title in Persian (English translation): Laser intraoperative radiotherapy: using a laser beam as an electron-beam source suitable for radiotherapy during surgery

Abstract: In a recent experiment, a high-efficiency regime of stable electron acceleration to kinetic energies ranging from 10 to 40 MeV has been achieved. The main parameters of the electron bunches are comparable with those of bunches provided by commercial radio-frequency-based linacs currently used in hospitals for intraoperative radiation therapy (IORT). IORT is an emerging technique applied in operating theaters during the surgical treatment of tumors. The performance and structure of a potential laser-driven hospital accelerator are compared in detail with those of several commercial devices. A number of possible advantages of the laser-based technique are also discussed.

Based on: https://doi.org/10.1063/1.

Link to Join Virtually: https://meet.google.com/fnv-

Oscillator Ising Machines, A Framework Of Analog Computing Technology: A Crash Course

IOMP Webinar: Radiopharmaceutical Therapy (RPT)

Tuesday, 25th October 2023 at 12 pm GMT; Duration 1 hour

To find the corresponding time in your country, please use this link:

https://greenwichmeantime.com/

Organizer: M Mahesh

Moderator: M Mahesh

Speakers: George Sgouros and Ana Kiess

Topic 1: Imaging and Dosimetry in Radiopharmaceutical therapy

George Sgouros, PhD

Dr. Sgouros is Professor and Director of the Radiological Physics Division in the Department of Radiology at the Johns Hopkins University School of Medicine. He received his PhD from the Biophysics Department at Cornell University and completed his postdoc in the Medical Physics Department at Memorial Hospital. He is an author of more than 200 peer-reviewed articles and of several book chapters and review articles. He is a recipient of the SNMMI Saul Hertz Award for outstanding achievements and contributions in radionuclide therapy and a fellow of the American Association of Physicists in Medicine (AAPM). He is a member of the Medical Internal Radionuclide Dose (MIRD) Committee of the Society of Nuclear Medicine and Molecular Imaging (SNMMI), which he chaired from 2008 to 2019. He has chaired a Dosimetry & Radiobiology Panel at a DOE alpha-emitters workshop, as well as an ICRU report committee for ICRU guidance document No. 96. Dr. Sgouros is a former chair (2015-2017) of the NIH study section on Radiation Therapeutics and Biology (RTB). He is also the founder and principal of Rapid, a start-up offering dosimetry and imaging services and software products in support of radiopharmaceutical therapy.

Abstract

Even after they have made it to Phase I clinical trial investigation, 97% of new cancer drugs fail. The majority of these drugs are chosen based on their ability to inhibit cell signaling pathways responsible for maintaining a cancer phenotype. Although this approach has led to dramatic improvements in treatment efficacy for certain cancers, it has turned out to be more complex than initially appreciated. Radiopharmaceutical therapy (RPT) involves the targeted delivery of radiation to tumor cells or to the tumor microenvironment. Because the radionuclides used in RPT also emit photons, nuclear medicine imaging can be used to measure the pharmacokinetics of the therapeutic agent and to estimate tumor and normal-organ absorbed doses in individual patients, enabling an individualized (precision medicine) approach to RPT treatment planning. This unique feature of RPT, along with its ability to deliver highly potent alpha-particle radiation to targeted cells, is at the heart of what distinguishes RPT from other cancer treatments for widespread metastases.
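Image-based dosimetry of this kind is commonly organized around the MIRD schema, in which the absorbed dose to a region is its time-integrated ("cumulated") activity multiplied by an S value that encodes the radionuclide's emissions and the geometry. The Python sketch below assumes a single region irradiating itself, trapezoidal integration between imaging time points, and a mono-exponential tail after the last image; the activity data and the S value are hypothetical, not patient data.

```python
import math


def cumulated_activity(times_h, activities_mbq):
    """Time-integrated activity (MBq*h) of one source region.

    Trapezoids between imaging time points, plus a mono-exponential tail
    fitted to the last two points. Activity before the first time point is
    ignored, which is a simplifying assumption.
    """
    if len(times_h) != len(activities_mbq) or len(times_h) < 2:
        raise ValueError("need at least two matching time points")
    area = 0.0
    for i in range(1, len(times_h)):
        dt = times_h[i] - times_h[i - 1]
        area += 0.5 * (activities_mbq[i] + activities_mbq[i - 1]) * dt
    # Effective clearance rate (1/h) estimated from the last two measurements.
    lam = math.log(activities_mbq[-2] / activities_mbq[-1]) / (times_h[-1] - times_h[-2])
    if lam <= 0:
        raise ValueError("activity must still be falling to extrapolate the tail")
    return area + activities_mbq[-1] / lam


def absorbed_dose_gy(cum_activity_mbq_h, s_value_gy_per_mbq_h):
    """MIRD-style absorbed dose: D = A_tilde * S."""
    return cum_activity_mbq_h * s_value_gy_per_mbq_h


# Hypothetical tumor time-activity data from four imaging sessions.
times = [4.0, 24.0, 72.0, 168.0]      # hours after administration
activity = [50.0, 42.0, 30.0, 15.0]   # MBq in the tumor
a_tilde = cumulated_activity(times, activity)
print(f"cumulated activity: {a_tilde:.0f} MBq*h")
print(f"absorbed dose: {absorbed_dose_gy(a_tilde, 1e-3):.2f} Gy")  # S value is hypothetical
```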

Learning Objectives:

- Understand the mechanism of Radiopharmaceutical therapy.

- Compare and contrast RPT with other cancer therapy modalities.

- Understand the distinction between RPT and Theranostics.

Topic 2: Clinical Radiopharmaceutical Therapy, Dose-Response and Future Directions

Ana Kiess, MD, PhD

Dr. Ana Kiess’s clinical focus is on the treatment of prostate cancer and head and neck cancers with radiopharmaceutical therapies and external beam radiotherapy. Her research concentrates on the integration of dosimetry, dose-response analyses, and new radiopharmaceutical therapies into the clinic.

Education:

MD; Duke University School of Medicine (2008)

PhD; Biomedical Engineering; Duke University (2008)

Residency:

Radiation Oncology; Memorial Sloan-Kettering Cancer Center (2013)

Recent Publications:

- Wang J, Kiess AP. PSMA-targeted therapy for non-prostate cancers. Front Oncol. 2023 Aug. 13:1220586. doi: 10.3389/fonc.2023.1220586.

- Kiess AP, Hobbs RF, Bednarz B, Knox SJ, Meredith R, Escorcia FE. ASTRO’s framework for radiopharmaceutical therapy curriculum development for trainees. Int J Radiat Oncol Biol Phys. 2022; 113(4):719-726.

- Jia AY, Kashani R, Zaorsky NG, Baumann BC, Michalski J, Zoberi JE, Kiess AP, Spratt DE. Lutetium-177 Prostate-Specific Membrane Antigen Therapy: A Practical Review. Pract Radiat Oncol. 2022; 12(4): 294-299.

- Jia AY, Kiess AP, Li Q, Antonarakis ES. Radiotheranostics in advanced prostate cancer: Current and future directions. Prostate Cancer and Prostatic Diseases. 2023, April: 1-11.

Abstract

Radiopharmaceuticals are rapidly expanding in clinical use and development for prostate cancer and many other tumor types. As in other radiation therapies, there is a dose-response relationship for both tumor and normal tissues, with increasing responses or toxicities at higher absorbed doses. In this webinar, we will discuss these concepts in relation to currently approved radiopharmaceutical therapies (RPTs) and future directions of RPTs. We will also review clinical indications and practical use of currently approved RPTs including [¹⁷⁷Lu]Lu-PSMA-617, [¹⁷⁷Lu]Lu-DOTATATE, and [²²³Ra]RaCl₂.
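One common way to picture such a relationship is a sigmoid curve of effect probability against absorbed dose. The Python sketch below assumes a log-logistic form; the D50 and slope values are purely illustrative and are not clinical data for any of the agents named above.

```python
def response_probability(dose_gy: float, d50_gy: float, slope: float) -> float:
    """Log-logistic dose-response curve P(D) = 1 / (1 + (D50 / D)**slope).

    d50_gy is the absorbed dose giving a 50% probability of the effect and
    slope controls how steep the curve is. Both are hypothetical inputs here.
    """
    if dose_gy <= 0:
        return 0.0
    return 1.0 / (1.0 + (d50_gy / dose_gy) ** slope)


# Illustrative (not clinical) parameters for a tumor effect and a toxicity.
for dose in (10, 30, 50, 70, 90):
    tumor = response_probability(dose, d50_gy=40.0, slope=4.0)
    toxicity = response_probability(dose, d50_gy=80.0, slope=6.0)
    print(f"{dose:3d} Gy  tumor response {tumor:.2f}  toxicity {toxicity:.2f}")
```

In this picture, the separation between the tumor curve and the normal-tissue curve is the window that dose-guided treatment planning tries to exploit.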

Learning Objectives:

- Understand clinical indications and practical use of currently approved radiopharmaceutical therapies

- Discuss concepts of radiation dose and response of tumors and normal organ toxicities

- Explore future directions of clinical radiopharmaceutical therapies.

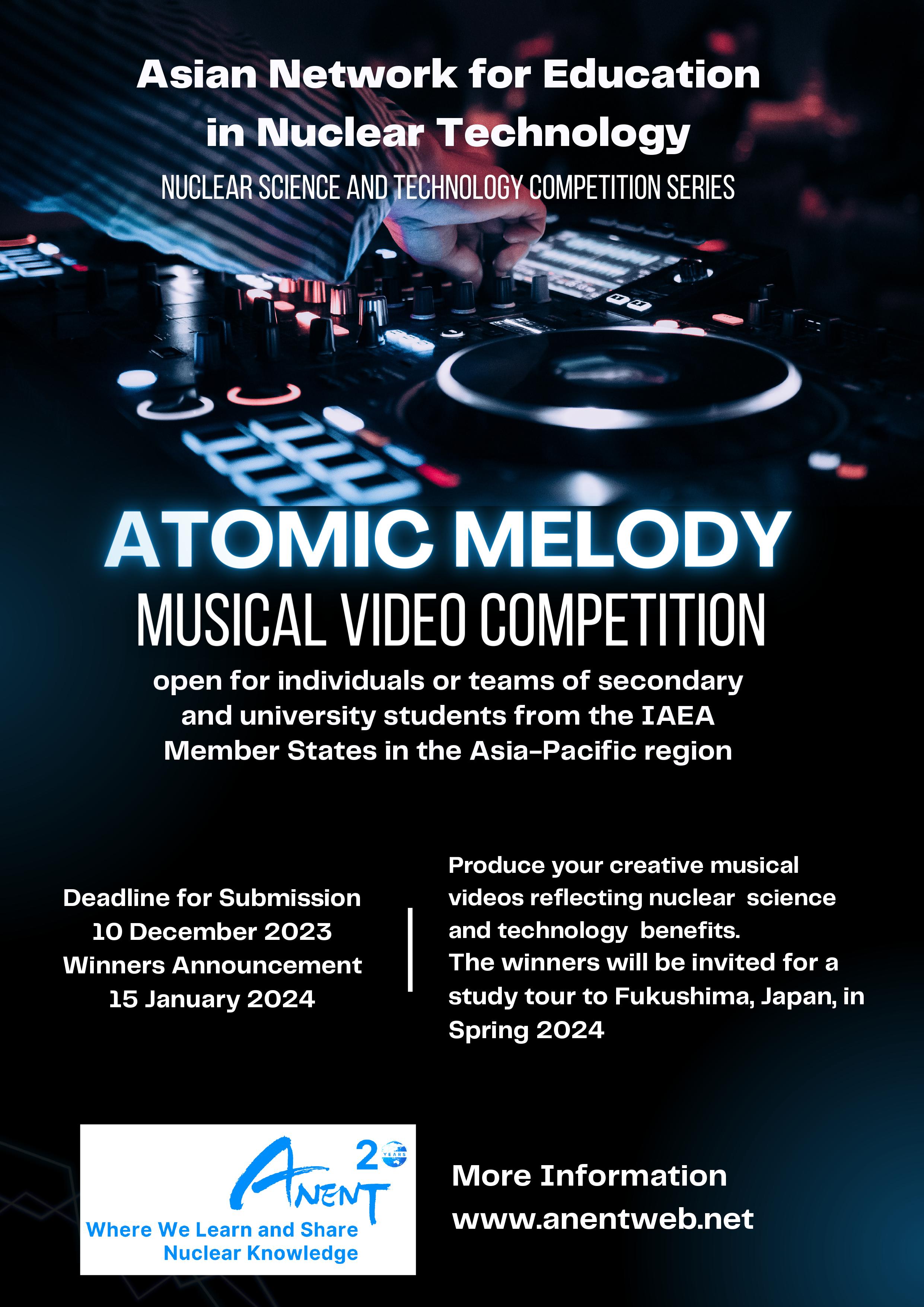

Atomic Melody Musical Video Competition

Introduction

Nuclear science and technology are an integral part of many aspects of modern life, including health, agriculture, industry, and energy production. To deepen the younger generation's understanding of, and interest in, nuclear science and technology in a creative and fun way, ANENT will hold a music video competition titled "Atomic Melody - Nuclear Science and Technology Musical Video Competition" as part of its 20th anniversary activities. The title "Atomic Melody" combines the two key elements of the competition: nuclear science and technology, and the music video. The word "atomic" refers to the scientific and technical aspects, while "melody" refers to the musical and video aspects. The competition is organized within the framework of IAEA TC Project RAS/0/091 "Supporting Nuclear Science and Technology Education at the Secondary and Tertiary Level". Students from all backgrounds are invited to participate.

Purpose

The purpose of the competition is to give young people the opportunity to produce creative works in the form of musical videos whose content reflects their knowledge of nuclear science and technology. Music videos must be original and should convey information about the benefits of nuclear science and technology creatively and accurately.

Categories

The competition has two categories as follows:

- Secondary School Student.

- University Student.

Eligibility

- Individuals, or teams of two students, from IAEA Member States in the Asia-Pacific region.

- Secondary school students with a maximum age of 17 at the end of April 2024 and an endorsement letter from their school.

- Undergraduate University Students with the maximum age of 25 years.

Participation Fee

There is no fee to participate in this competition.

Participation Criteria:

- Each individual or team can only submit one musical video.

- The musical video must be no longer than 3 minutes.

- Any kind of music genre is acceptable.

- The video should be uploaded onto YouTube with the hashtag #ANENT20Anniversary.

Schedule

- Announcement: 27 September 2023

- Deadline for Submission: 27 November 2023

- Videos of 15 finalists per category will be uploaded to the ANENT YouTube channel for public review, with overall impact assessed by the number of likes received: 10 December 2023 to 10 January 2024.

- Winners Announcement: 15 January 2024.

- Study visit to Fukushima, Japan in Spring 2024.

Prize

- Five (5) winners will be identified for each category.

- The winners, together with their accompanying teacher, will have the opportunity to travel to Fukushima, Japan, for a study tour.

- All participants will be given a certificate of participation while winners will be provided a certificate of achievement.

Breakthrough Prize for Quantum Field Theorists

• Physics 16, 165

The 2024 Breakthrough Prize in Fundamental Physics goes to John Cardy and Alexander Zamolodchikov for their work in applying field theory to diverse problems.

Many physicists hear the words “quantum field theory,” and their thoughts turn to electrons, quarks, and Higgs bosons. In fact, the mathematics of quantum fields has been used extensively in other domains outside of particle physics for the past 40 years. The 2024 Breakthrough Prize in Fundamental Physics has been awarded to two theorists who were instrumental in repurposing quantum field theory for condensed-matter, statistical physics, and gravitational studies.

“I really want to stress that quantum field theory is not the preserve of particle physics,” says John Cardy, a professor emeritus from the University of Oxford. He shares the Breakthrough Prize with Alexander Zamolodchikov from Stony Brook University, New York.

The Breakthrough Prize is perhaps the “blingiest” of science awards, with $3 million being given for each of the five main awards (three for life sciences, one for physics, and one for mathematics). Additional awards are given to early-career scientists. The founding sponsors of the Breakthrough Prize are entrepreneurs Sergey Brin, Priscilla Chan and Mark Zuckerberg, Julia and Yuri Milner, and Anne Wojcicki.

The fundamental physics prize going to Cardy and Zamolodchikov is “for profound contributions to statistical physics and quantum field theory, with diverse and far-reaching applications in different branches of physics and mathematics.” When notified about the award, Zamolodchikov expressed astonishment. “I never thought to find myself in this distinguished company,” he says. He was educated as a nuclear engineer in the former Soviet Union but became interested in particle physics. “I had to clarify for myself the basics.” Those basics were quantum field theory, which describes the behaviors of elementary particles with equations that are often very difficult to solve. In the early 1980s, Zamolodchikov realized that he could make more progress in a specialized corner of mathematics called two-dimensional conformal field theory (2D CFT). “I was lucky to stumble on this interesting situation where I could find exact solutions,” Zamolodchikov says.

CFT describes “scale-invariant” mappings from one space to another. “If you take a part of the system and blow it up by the right factor, then that part looks like the whole in a statistical sense,” explains Cardy. More precisely, conformal mappings preserve the angles between lines as the lines stretch or contract in length. In certain situations, quantum fields obey this conformal symmetry. Zamolodchikov’s realization was that solving problems in CFT—especially in 2D where the mathematics is easiest—gives a starting point for studying generic quantum fields, Cardy says.
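Angle preservation is easy to check numerically. In this Python sketch (our own illustration; the maps and the test point are arbitrary choices), two short segments leaving a point at 60 degrees are pushed through a conformal map, z², and through a map that stretches only one axis. The first keeps the 60-degree angle; the second does not.

```python
import cmath
import math


def image_angle_deg(f, z0, d1, d2, h=1e-6):
    """Angle (degrees) between the images under f of two short segments that
    leave the point z0 in directions d1 and d2 (finite-difference estimate)."""
    w1 = f(z0 + h * d1) - f(z0)
    w2 = f(z0 + h * d2) - f(z0)
    return math.degrees(cmath.phase(w2 / w1))


def square(z):
    """z**2 is holomorphic with nonzero derivative away from 0, hence conformal."""
    return z * z


def stretch_y(z):
    """Doubles only the imaginary part, so lengths and angles both change."""
    return complex(z.real, 2.0 * z.imag)


z0 = 1 + 1j
d1 = 1 + 0j                          # direction along the real axis
d2 = cmath.exp(1j * math.pi / 3)     # direction 60 degrees away from d1

print(image_angle_deg(square, z0, d1, d2))     # close to 60
print(image_angle_deg(stretch_y, z0, d1, d2))  # about 73.9
```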

Cardy started out as a particle physicist, but he became interested in applying quantum fields to the world beyond elementary particles. When he heard about the work of Zamolodchikov and other scientists in the Soviet Union, he immediately saw the potential and versatility of 2D CFT. One of the first places he applied this mathematics was in phase transitions, which arise when, for example, the atomic spins of a material suddenly align to form a ferromagnet. Within the 2D CFT framework, Cardy showed that you could perform computations on small systems—with just ten spins, for example—and extract information that pertains to an infinitely large system. In particular, he was able to calculate the critical exponents that describe the behavior of various phase transitions.
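To make the idea of extracting infinite-system information from ten spins concrete, here is a small Python sketch of finite-size scaling in Cardy's spirit. The model, the critical transverse-field Ising chain (a quantum chain in the same universality class as the 2D Ising model), and all parameter choices are ours, not the article's. The sketch uses the standard CFT predictions for a periodic chain of N sites, E0(N) ≈ N e∞ − πvc/(6N) for the ground state and E1 − E0 ≈ 2πvx/N for an excitation with scaling dimension x.

```python
import math

# Illustrative model (our choice): the critical transverse-field Ising chain
#   H = -sum_i Z_i Z_{i+1} - sum_i X_i   on a ring of N spins.
# It maps to free fermions with single-particle energies eps_k = 4|sin(k/2)|,
# so the ten-spin problem reduces to a sum over N modes.
N = 10
V = 2.0                    # sound velocity: slope of eps_k at k = 0
E_INF = -4.0 / math.pi     # ground-state energy per spin of the infinite chain


def mode_energies(n_spins):
    """Fermion energies for momenta k = (2m + 1) * pi / n_spins (even-parity sector)."""
    return [4.0 * abs(math.sin((2 * m + 1) * math.pi / (2 * n_spins)))
            for m in range(n_spins)]


eps = mode_energies(N)
e0 = -0.5 * sum(eps)               # ground-state energy of the ten-spin ring
gap = sum(sorted(eps)[:2])         # lowest excitation in the same sector (two fermions)

# Cardy's finite-size formulas for a periodic chain of length N:
#   E0(N)   ~ N * e_inf - pi * v * c / (6 N)  ->  central charge c
#   E1 - E0 ~ 2 * pi * v * x / N              ->  scaling dimension x
c = -(e0 - N * E_INF) * 6 * N / (math.pi * V)
x_energy = gap * N / (2 * math.pi * V)
nu = 1.0 / (2.0 - x_energy)        # correlation-length exponent, nu = 1 / (d - x) with d = 2

print(f"c = {c:.3f}, x = {x_energy:.3f}, nu = {nu:.3f}")
```

For ten spins this gives c ≈ 0.50, x ≈ 1.00, and ν ≈ 1.00, close to the exact 2D Ising values c = 1/2, x = 1, and ν = 1; the small residual differences are finite-size corrections that shrink as N grows.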

Cardy found other uses of 2D CFT in, for example, percolation theory and quantum spin chains. “I would hope people consider my contributions as being quite broad, because that’s what I tried to be over the years,” he says. Zamolodchikov also explored the application of quantum field theory in diverse topics, such as critical phenomena and fluid turbulence. “I tried to develop it in many respects,” he says. The two theorists never collaborated, but they both confess to admiring the other’s work. “We’ve written papers on very similar things,” Cardy says. “I would say that we have a friendly rivalry.” He remembers first encountering Zamolodchikov in 1986 at a conference organized in Sweden as a “neutral” meeting point for Western and Soviet physicists. “It was wonderful to meet him and his colleagues for the first time,” Cardy says.

“Zamolodchikov and Cardy are the oracles of two dimensions,” says Pedro Vieira, a quantum field theorist from the Perimeter Institute in Canada. He says that one of the things that Zamolodchikov showed was the infinite number of symmetries that can exist in 2D CFT. Cardy was especially insightful in how to apply the mathematical insights of 2D to other dimensions. Vieira says of the pair, “They understood the power of 2D physics, in that it is very simple and elegant, and at the same time mathematically rich and complex.”

Vieira says that the work of Zamolodchikov and Cardy continues to be important for a wide range of researchers, including condensed-matter physicists who study 2D surfaces and string theorists who model 1D strings moving in time. One topic attracting a lot of attention these days is the so-called AdS/CFT correspondence, which connects CFT mathematics with gravitational theory (see Viewpoint: Are Black Holes Really Two Dimensional?). Cardy says that there’s also been a great deal of recent work on CFT in more than two dimensions. “I’m sure that [higher-dimensional CFT] will win lots of awards in the future,” he says. Zamolodchikov continues to work on extensions of quantum field theory, such as the “TT̄ deformation,” that may provide insights into fundamental physics, just as CFT has done.

Zamolodchikov and Cardy and the 18 other Breakthrough winners will receive their awards on April 13, 2024, at a red-carpet ceremony that routinely attracts celebrities from film, music, and sports. Cardy says that he is looking forward to it. “I like a good party.”

–Michael Schirber

Michael Schirber is a Corresponding Editor for Physics Magazine based in Lyon, France.

REFERENCE: https://physics.aps.org/articles/v16/165?utm_campaign=weekly&utm_medium=email&utm_source=emailalert

Quantum Ratchet Made Using an Optical Lattice

• Physics 16, s140

Researchers have turned an optical lattice into a ratchet that moves atoms from one site to the next.

A ratchet is a device that produces a net forward motion of an object from a periodic (or random) driving force. Although ratchets are common in watches and in cells (see Focus: Stalling a Molecular Motor), they are hard to make for quantum systems. Now researchers demonstrate a quantum ratchet for a collection of cold atoms trapped in an optical lattice [1]. By varying the lattice’s light fields in a time-dependent way, the researchers show that they can move the atoms coherently from one lattice site to the next without disturbing the atoms’ quantum states.

One type of ratchet (a Hamiltonian ratchet) works by providing periodic, nonlossy pushes to a gas or other multiparticle system. For particles starting in certain initial states, the pushes are timed with their motion, and the resulting movement is in a particular forward direction. For particles in other states, the pushes are out of sync, and the particles travel in chaotic trajectories with no preferred direction.
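The contrast between well-timed and out-of-sync lossless pushes can be illustrated with the classical kicked rotor (the "standard map"). This is a textbook classical analogue chosen for illustration, not the quantum scheme of Dupont et al. At kick strength K = 2π the map has an "accelerator mode": a particle starting at angle π/2 with zero momentum gains exactly 2π of momentum per kick and returns to the same angle, while generic starting points wander chaotically. Unlike a true ratchet, this map is symmetric, so a start at 3π/2 runs the other way.

```python
import math

TWO_PI = 2.0 * math.pi


def kicked_rotor(x, p, kick_strength, n_kicks):
    """Iterate the classical standard map p' = p + K sin(x), x' = x + p'.

    The kicks are lossless (the map is Hamiltonian). The position is an
    angle kept in [0, 2*pi); the momentum after every kick is returned.
    """
    momenta = []
    for _ in range(n_kicks):
        p = p + kick_strength * math.sin(x)
        x = (x + p) % TWO_PI
        momenta.append(p)
    return momenta


K = TWO_PI
# In sync: from x = pi/2, p = 0, each kick adds 2*pi to p and x comes back
# to pi/2, so the momentum grows steadily in one direction.
forward = kicked_rotor(math.pi / 2, 0.0, K, 50)
# Out of sync: a generic starting angle gives irregular, chaotic momenta.
generic = kicked_rotor(1.0, 0.0, K, 50)
print(forward[-1] / TWO_PI)          # about 50
print([round(p, 2) for p in generic[-5:]])
```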

Hamiltonian ratchets have previously been demonstrated for quantum systems, but for those ratchets the particles ended up spread out in space. The ratchet designed by David Guéry-Odelin from the University of Toulouse, France, and his colleagues has tighter directional control. For the demonstration, the researchers placed 10⁵ rubidium atoms in the periodic potential of an optical lattice. Applying specially tuned modulations to this potential, they showed that the atoms moved in discrete steps from one lattice site to the next. At the end of each step, the atoms came to rest in their ground state. This well-defined transport could have potential applications in controlling matter waves for quantum experiments, Guéry-Odelin says.

–Michael Schirber

Michael Schirber is a Corresponding Editor for Physics Magazine based in Lyon, France.

References

- N. Dupont et al., “Hamiltonian ratchet for matter-wave transport,” Phys. Rev. Lett. 131, 133401 (2023).

REFERENCE: https://physics.aps.org/articles/v16/s140?utm_campaign=weekly&utm_medium=email&utm_source=emailalert

How AI and ML Will Affect Physics

Sankar Das Sarma, Department of Physics, University of Maryland, College Park, College Park, MD

• Physics 16, 166

The more physicists use artificial intelligence and machine learning, the more important it becomes for them to understand why the technology works and when it fails.

The advent of ChatGPT, Bard, and other large language models (LLMs) has naturally excited everybody, including the entire physics community. There are many evolving questions for physicists about LLMs in particular and artificial intelligence (AI) in general. What do these stupendous developments in large-data technology mean for physics? How can they be incorporated in physics? What will be the role of machine learning (ML) itself in the process of physics discovery?

Before I explore the implications of those questions, I should point out there is no doubt that AI and ML will become integral parts of physics research and education. Even so, similar to the role of AI in human society, we do not know how this new and rapidly evolving technology will affect physics in the long run, just as our predecessors did not know how transistors or computers would affect physics when the technologies were being developed in the early 1950s. What we do know is that the impact of AI/ML on physics will be profound and ever evolving as the technology develops.

The impact is already being felt. Just a cursory search of Physical Review journals for “machine learning” in articles’ titles, abstracts, or both returned 1456 hits since 2015 and only 64 for the entire period from Physical Review’s debut in 1893 to 2014! The second derivative of ML usage in articles is also increasing. The same search yielded 310 Physical Review articles in 2022 with ML in the title, abstract, or both; in the first 6 months of 2023, there are already 189 such publications.

ML is already being used extensively in physics, which is unsurprising since physics deals with data that are often very large, as is the case in some high-energy physics and astrophysics experiments. In fact, physicists have been using some forms of ML for a long time, even before the term ML became popular. Neural networks, the fundamental pillars of AI, also have a long history in theoretical physics, as is apparent from the fact that the term “neural networks” has appeared in the titles and abstracts of hundreds of Physical Review articles since its first usage in 1985 in the context of models for understanding spin glasses. The AI/ML use of neural networks is quite different from the way neural networks appear in spin glass models, but the basic idea of representing a complex system using neural networks is shared by both cases. ML and neural networks have been woven into the fabric of physics going back 40 years or more.
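The shared idea can be illustrated with a Hopfield-type network, a standard example of the spin-glass picture of neural networks: each neuron is an Ising spin ±1, memories are stored in symmetric couplings by a Hebbian rule, and recall lowers an Ising-like energy. This pure-Python sketch is our own illustration; the two 16-unit patterns and the corrupted bits are arbitrary choices.

```python
def train(patterns):
    """Hebbian couplings W_ij = (1/N) * sum over patterns of xi_i * xi_j, W_ii = 0."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for xi in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += xi[i] * xi[j] / n
    return w


def energy(w, s):
    """Ising-like energy E = -1/2 * sum_ij W_ij s_i s_j."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))


def recall(w, state, max_sweeps=100):
    """Update spins one at a time, s_i <- sign(sum_j W_ij s_j), until stable."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(w[i][j] * s[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:
            break
    return s


memory_a = [1] * 8 + [-1] * 8
memory_b = [1, -1] * 8
weights = train([memory_a, memory_b])

noisy = list(memory_a)
for i in (0, 5, 12):            # corrupt three of the sixteen "spins"
    noisy[i] = -noisy[i]

restored = recall(weights, noisy)
print(restored == memory_a)                               # True
print(energy(weights, noisy), energy(weights, restored))  # energy goes down
```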

What has changed is the availability of very large computer clusters with huge computing power, which enable ML to be applied in a practical manner to many physical systems. For my field, condensed-matter physics, these advances mean that ML is being increasingly used to analyze large datasets involving materials properties and predictions. In these complex situations, the use of AI/ML will become a routine tool for every professional physicist, just like vector calculus, differential geometry, and group theory. Indeed, the use of AI/ML will soon become so widespread that we simply will not remember why it was ever a big deal. At that point, this opinion piece of mine will look a bit naive, much like pontifications in the 1940s about using computers for doing physics.

But what about deeper usage of AI/ML in physics beyond using it as an everyday tool? Can they help us solve deep problems of great significance? Could physicists, for example, have used AI/ML to come up with the Bardeen-Cooper-Schrieffer theory of superconductivity in 1950 if they had been available? Can AI/ML revolutionize doing theoretical physics by finding ideas and concepts such as the general theory of relativity or the Schrödinger equation? Most physicists I talk to firmly believe that this would be impossible. Mathematicians feel this way too. I do not know of any mathematician who believes that AI/ML can prove, say, the Riemann hypothesis or Goldbach’s conjecture. I, on the other hand, am not so sure. All ideas are somehow deeply rooted in accumulated knowledge, and I am unwilling to assert that I already know what AI/ML won’t ever be able to do. After all, I remember the time when there was a widespread feeling that AI could never beat the great champions of the complex game of Go. A scientific example is the ability of DeepMind’s AlphaFold to predict what structure a protein’s string of amino acids will adopt, a feat that was thought impossible 20 years ago.

This brings me to my final point. Doing physics using AI/ML is happening, and it will become routine soon. But what about understanding the effectiveness of AI/ML and of LLMs in particular? If we think of an LLM as a complex system that suddenly becomes extremely predictive after it has trained on a huge amount of data, the natural question for a physicist to ask is what is the nature of that shift? Is it a true dynamical phase transition that occurs at some threshold training point? Or is it just the routine consequence of interpolations among known data, which just work empirically, sometimes even when extrapolated? The latter, which is what most professional statisticians seem to believe, involves no deep principle. But the former involves what could be called the physics of AI/ML and constitutes in my mind the most important intellectual question: Why does AI/ML work and when does it fail? Is there a phase transition at some threshold where the AI/ML algorithm simply predicts everything correctly? Or is the algorithm just some huge interpolation, which works because the amount of data being interpolated is so gigantic that most questions simply fall within its regime of validity? As physicists, we should not just be passive users of AI/ML but also dig into these questions. To paraphrase a famous quote from a former US president, we should not only ask what AI/ML can do for us (a lot actually), but also what we can do for AI/ML.

About the Author

Sankar Das Sarma is the Richard E. Prange Chair in Physics and a Distinguished University Professor at the University of Maryland, College Park. He is also a fellow of the Joint Quantum Institute and the director of the Condensed Matter Theory Center, both at the University of Maryland. Das Sarma received his PhD from Brown University, Rhode Island. He has belonged to the University of Maryland physics faculty since 1980. His research interests are condensed-matter physics, quantum computation, and statistical mechanics.

Reference: https://physics.aps.org/articles/v16/166?utm_campaign=weekly&utm_medium=email&utm_source=emailalert

First Light for a Next-Generation Light Source

X-ray free-electron lasers (XFELs) first came into existence two decades ago. They have since enabled pioneering experiments that “see” both the ultrafast and the ultrasmall. Existing devices typically generate short and intense x-ray pulses at a rate of around 100 x-ray pulses per second. But one of these facilities, the Linac Coherent Light Source (LCLS) at the SLAC National Accelerator Laboratory in California, is set to eclipse this pulse rate. The LCLS Collaboration has now announced “first light” for its upgraded machine, LCLS-II. When it is fully up and running, LCLS-II is expected to fire one million pulses per second, making it the world’s most powerful x-ray laser.

The LCLS-II upgrade signifies a quantum leap in the machine’s potential for discovery, says Robert Schoenlein, the LCLS’s deputy director for science. Now, rather than “demonstration” experiments on simple, model systems, scientists will be able to explore complex, real-world systems, he adds. For example, experimenters could peer into biological systems at ambient temperatures and physiological conditions, study photochemical systems and catalysts under the conditions in which they operate, and monitor nanoscale fluctuations of the electronic and magnetic correlations thought to govern the behavior of quantum materials.

The XFEL was first proposed in 1992 to tackle the challenge of building an x-ray laser. Conventional laser schemes excite large numbers of atoms into states from which they emit light. But excited states with energies corresponding to x-ray wavelengths are too short-lived to build up a sizeable excited-state population. XFELs instead rely on electrons traveling at relativistic speed through a periodic magnetic array called an undulator. Moving in a bunch, the electrons wiggle through the undulator, emitting x-ray radiation that interacts multiple times with the bunch and becomes amplified. The result is a bright x-ray beam with laser coherence.
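The wavelength an XFEL emits is set by the electron energy and the undulator through the standard resonance condition for a planar undulator, λ = λu(1 + K²/2)/(2γ²), where λu is the undulator period, K the dimensionless undulator strength parameter, and γ the electrons' Lorentz factor. The factor 1/(2γ²) is what turns a centimeter-scale magnet period into nanometer-scale light. The Python sketch below evaluates the formula; the beam energy, period, and K are illustrative assumptions, not the parameters of LCLS or LCLS-II.

```python
ELECTRON_REST_ENERGY_MEV = 0.51099895   # m_e c^2
HC_EV_NM = 1239.84198                   # h c in eV nm


def undulator_wavelength_m(beam_energy_gev, period_m, k_param):
    """Fundamental on-axis wavelength of a planar undulator,
    lambda = period * (1 + K^2 / 2) / (2 * gamma^2)."""
    gamma = beam_energy_gev * 1e3 / ELECTRON_REST_ENERGY_MEV
    return period_m * (1.0 + k_param ** 2 / 2.0) / (2.0 * gamma ** 2)


# Illustrative inputs (not LCLS or LCLS-II design values):
# a 4 GeV beam in a 3 cm period undulator with K = 3.5.
lam = undulator_wavelength_m(4.0, 0.03, 3.5)
print(f"wavelength {lam * 1e9:.2f} nm, photon energy {HC_EV_NM / (lam * 1e9):.0f} eV")
```

Because the wavelength scales as 1/γ², doubling the beam energy shortens the emitted wavelength fourfold, which is one reason hard x-ray facilities use higher-energy electron beams.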

The first XFEL was built in Hamburg, Germany, in 2005. Today that XFEL emits “soft” x-ray radiation, which has wavelengths as short as a few nanometers. LCLS switched on four years later and expanded XFEL’s reach to the much shorter wavelengths of “hard” x rays, which are essential to atomic-resolution imaging and diffraction experiments. These and other facilities that later appeared in Japan, Italy, South Korea, Germany, and Switzerland have enabled scientists to probe catalytic reactions in real time, solve the structures of hard-to-crystallize proteins, and shed light on the role of electron–phonon coupling in high-temperature superconductors. The ability to record movies of the dynamics of molecules, atoms, and even electrons also became possible because x-ray pulses can be as short as a couple of hundred attoseconds.

The upgrades to LCLS offer a new mode of XFEL operation, in which the facility delivers an almost continuous x-ray beam in the form of a megahertz pulse train. For the original LCLS, the pulse rate, which maxed out at 120 Hz, was set by the properties of the linear accelerator that produced the relativistic electrons. Built out of copper, a conventional metal, and operated at room temperature, the accelerator had to be switched on and off 120 times per second to avoid heat-induced damage. In LCLS-II some of the copper has been replaced with niobium, which is superconducting at the operating temperature of 2 K. Bypassing the damage limitations of copper, the dissipationless superconducting elements allow an 8000-fold gain in the maximum repetition rate. The new superconducting technology is also expected to reduce “jitter” in the beam, says LCLS director Michael Dunne. Greater stability and reproducibility, higher repetition rate, and increased average power “will transform our ability to look at a whole range of systems,” he adds.

LCLS-II is a boon for time-resolved chemistry-focused experiments, says Munira Khalil, a physical chemist at the University of Washington in Seattle. Khalil, a user of LCLS, plans to take advantage of the photon bounty of the rapid-fire pulses to perform dynamical experiments. She hopes such experiments may fulfill “a chemist’s dream”: real-time observations of the coupled motion of atoms and electrons. With extra photons, scientists could also probe dilute samples, potentially shedding light on how metals bind to specific sites in proteins—a process relevant to the function of half of all of nature’s proteins.

The megahertz pulse rate also means that experiments that previously took days to perform could now be completed in hours or minutes, says Henry Chapman of the Center for Free Electron Laser Science at DESY, Germany. At LCLS and later at Hamburg’s XFEL, Chapman ran pioneering experiments to determine the structures of proteins. The method he used, called serial crystallography, involves merging the diffraction patterns of multiple samples sequentially injected into the XFEL’s beam. Serial crystallography has allowed scientists to determine the structures of biologically relevant proteins that form crystals too small to study with conventional crystallography techniques. Chapman says that the increased throughput enabled by LCLS-II will permit much more ambitious experiments, such as measurements of biomolecular reactions on timescales from femtoseconds to milliseconds. “One could also think of an ‘on the fly’ analysis that feeds back into the experiment to discover optimal conditions for drug binding or catalysis,” he says.
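The merging step can be sketched in a few lines: each still pattern records only a partial, noisy measurement of whichever reflections it happens to catch, and averaging very many patterns per Miller index recovers the underlying intensities, with a statistical error that shrinks roughly as one over the square root of the number of observations. That scaling is why a higher pulse rate shortens experiments. This Python sketch assumes plain averaging (sometimes called Monte Carlo merging) and an invented data model; real pipelines also index, scale, and model partiality, none of which is shown.

```python
import random
from collections import defaultdict
from statistics import mean


def merge_snapshots(snapshots):
    """Merge still diffraction patterns by plain averaging per reflection.

    snapshots: iterable of dicts mapping a Miller index (h, k, l) to the
    intensity measured for it in one pattern.
    Returns {(h, k, l): (mean intensity, number of observations)}.
    """
    observations = defaultdict(list)
    for pattern in snapshots:
        for hkl, intensity in pattern.items():
            observations[hkl].append(intensity)
    return {hkl: (mean(values), len(values)) for hkl, values in observations.items()}


def fake_snapshot(rng, true_intensities, hit_fraction=0.3):
    """Hypothetical data model: each pattern catches a random subset of
    reflections, each recorded with a random partiality between 0 and 2."""
    return {hkl: value * rng.uniform(0.0, 2.0)
            for hkl, value in true_intensities.items()
            if rng.random() < hit_fraction}


truth = {(1, 0, 0): 100.0, (0, 1, 1): 40.0, (2, 1, 0): 10.0}
rng = random.Random(0)
merged = merge_snapshots(fake_snapshot(rng, truth) for _ in range(20000))
for hkl, (value, count) in sorted(merged.items()):
    print(hkl, round(value, 1), count, "true:", truth[hkl])
```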

For Khalil, the dramatic speedup of the experiments is a key advance of LCLS-II, as she thinks it will make these kinds of experiments accessible to a wider group of people. Until now, she says, XFEL facilities were mostly used by people who had the opportunity to work extensively at XFELs as postdocs or graduate students. Many more experimenters should now be able to enter the XFEL arena, she says. “It’s an exciting time for the field.”

–Matteo Rini

Matteo Rini is the Editor of Physics Magazine.

Metallic Gratings Produce a Strong Surprise

Using a metallic grating and infrared light, researchers have uncovered a light–matter coupling regime where the local coupling strength can be 3.5 times higher than the global average for the material.

R. Arul et al. [1]

When light and matter interact, quasiparticles called polaritons can form. Polaritons can change a material’s chemical reactivity or its electronic properties, but the details behind how those changes occur remain unknown. A new finding by Rakesh Arul and colleagues at the University of Cambridge could help change that [1, 2]. The team has uncovered an interaction regime where the light–matter coupling strength significantly exceeds that seen in previous experiments. Arul says that the discovery could improve researchers’ understanding of how polaritons induce material changes and could allow the exploration of a wider range of these quasiparticles.
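A common textbook way to picture polariton formation, not specific to this study, treats a light mode (energy E_c) and a molecular vibration (energy E_v) as two coupled oscillators with coupling strength g. Diagonalizing the resulting 2×2 Hamiltonian gives a lower and an upper polariton, split by 2g at resonance, so a larger coupling strength means a larger splitting. A minimal sketch with illustrative numbers in arbitrary energy units:

```python
import math

def polariton_energies(e_c: float, e_v: float, g: float) -> tuple[float, float]:
    """Eigenvalues of [[e_c, g], [g, e_v]], returned as (lower, upper) polariton energies."""
    mean = 0.5 * (e_c + e_v)
    half_gap = math.sqrt(g**2 + (0.5 * (e_c - e_v)) ** 2)
    return mean - half_gap, mean + half_gap

# At resonance (e_c == e_v) the splitting equals 2g.
lower, upper = polariton_energies(e_c=200.0, e_v=200.0, g=10.0)
print(lower, upper, upper - lower)  # 190.0 210.0 20.0
```

Detuning the two modes (e_c different from e_v) widens the gap beyond 2g, and the two states become more light-like or more matter-like.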

Light–matter coupling experiments are typically performed using molecules trapped in an optical cavity. Researchers have explored the coupling of such systems to visible and microwave radiation, but probing the wavelengths in between has been tricky because of difficulties in detecting polaritons created using infrared light. Midinfrared wavelengths are interesting because they correspond to the frequencies of molecular vibrations, which can control a material’s optoelectronic properties.
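The link between midinfrared light and molecular vibrations is a unit conversion: a wavelength λ corresponds to a wavenumber 1/λ and a frequency c/λ. For example, 5 μm light sits at 2000 cm⁻¹ (about 60 THz), within the range of many molecular bond vibrations. A small sketch; the sample wavelengths are illustrative choices, not values from the study:

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s

def wavenumber_cm_inv(wavelength_um: float) -> float:
    """Convert a wavelength in micrometers to a wavenumber in cm^-1."""
    return 1e4 / wavelength_um

def frequency_thz(wavelength_um: float) -> float:
    """Convert a wavelength in micrometers to a frequency in THz."""
    return SPEED_OF_LIGHT / (wavelength_um * 1e-6) / 1e12

for wl in (0.5, 5.0, 10.0):  # visible, midinfrared, midinfrared
    print(f"{wl:5.1f} um -> {wavenumber_cm_inv(wl):7.0f} cm^-1, {frequency_thz(wl):6.1f} THz")
```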

To study this regime, Arul and his colleagues developed a technique to shift the wavelengths of midinfrared polaritons to the visible range, where detectors can better pick them up. Rather than molecules in a cavity, they coupled light to molecules atop a metallic grating. When irradiated with a visible laser beam, polaritons generated by the infrared radiation imprinted a signal on the scattered visible light, allowing polariton detection.
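One way to see how a visible probe can report on an infrared-scale excitation is ordinary Stokes Raman scattering: the scattered photon leaves with the vibrational energy subtracted, so its wavenumber is the laser wavenumber minus the vibrational wavenumber. The reference titles indicate Raman probing, but the laser wavelength (633 nm) and vibration (1700 cm⁻¹, roughly a carbonyl stretch) below are assumptions chosen for illustration:

```python
def stokes_wavelength_nm(laser_nm: float, shift_cm_inv: float) -> float:
    """Wavelength of Stokes-scattered light for a given Raman shift."""
    laser_cm_inv = 1e7 / laser_nm               # nm -> cm^-1
    scattered_cm_inv = laser_cm_inv - shift_cm_inv
    return 1e7 / scattered_cm_inv               # cm^-1 -> nm

print(f"{stokes_wavelength_nm(633.0, 1700.0):.1f} nm")  # 709.3 nm
```

The midinfrared vibration thus shows up as a shifted line that is still in the visible and near-visible range, where detectors are more sensitive.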

Using their technique, the team found a light–matter coupling strength at specific locations that was 3.5 times the average for the whole system. Researchers have been exploring the interaction of light with metallic gratings since the time of Lord Rayleigh, Arul says. “The system is still delivering surprises.”

–Katherine Wright

Katherine Wright is the Deputy Editor of Physics Magazine.

References

- R. Arul et al., “Raman probing the local ultrastrong coupling of vibrational plasmon polaritons on metallic gratings,” Phys. Rev. Lett. 131, 126902 (2023).

- M. S. Rider et al., “Theory of strong coupling between molecules and surface plasmons on a grating,” Nanophotonics 11, 3695 (2022).

1st IPM Workshop on Accelerator Physics Group

Institute for Research in Fundamental Sciences

October 11-12, 2023 (Mehr 19-20, 1402)

Fundamentals of Particle Accelerator Construction and Design:

The accelerator group at IPM aims to carry out research and development in accelerator physics by studying modern accelerator projects worldwide. Our accelerator investigations encompass lattice design, radio-frequency systems, beam diagnostics, magnets, vacuum, electron sources, theoretical and computational electrodynamics, and plasma physics. To this end, our group is organizing an annual workshop on accelerator physics and technology to train motivated scientists to international standards and to develop this strategic field of science domestically. The first meeting of this workshop series will be held in a hybrid format on October 11-12, 2023 (Mehr 19-20, 1402). The workshop aims to bring together experts in different areas of accelerator physics from various institutes to discuss the latest research in the field. Another main purpose of the workshop is to give participants the opportunity to get to know one another and exchange ideas. The talks will focus on accelerator physics and technology and their implications for both science and everyday life. The event is intended to help graduate students, postdocs, and researchers expand their knowledge and broaden their research horizons.

Registration deadline: October 3, 2023 (Mehr 11, 1402).

Webinar link (Google Meet)

- Please enter your full name when joining as a guest.

- A microphone and headphones (or speakers) are required.

- Please test these devices on your system in advance to make sure they work properly.

Tracking Down the Origin of Neutrino Mass

- Department of Theoretical Physics, CERN, Geneva, Switzerland

• Physics 16, 20

Collider experiments have set new direct limits on the existence of hypothetical heavy neutrinos, helping to constrain how ordinary neutrinos get their mass.

The discovery over 20 years ago that neutrinos can oscillate from one type to another came as a surprise to particle physicists, as these oscillations require that neutrinos have mass—contrary to the standard model of particle physics. While multiple neutrino experiments continue to constrain oscillation rates and mass values (see Viewpoint: Long-Baseline Neutrino Experiments March On), one fundamental question remains unanswered: How do neutrinos get their mass? Many theoretical models exist, but so far none of them have been experimentally confirmed. Now the CMS Collaboration at CERN in Switzerland has presented results on a search for a hypothetical heavy neutrino that could be tied to neutrino mass generation [1]. No signatures of this particle were found, placing new constraints on a popular model for the origin of neutrino mass, called the seesaw mechanism. These results pave a new way to probe the neutrino mass origin at particle colliders in the future.

There are clues that neutrino masses might be special. Neutrinos have no electric charge—making them distinct from other “matter” particles, such as electrons and quarks. Neutrinos are also unique in being observed with just one type of handedness (a property that emerges from the particle mass and spin). Other matter particles can be either left-handed or right-handed and obtain their mass via their interaction with the so-called Higgs field (see Focus: Nobel Prize—Why Particles Have Mass). The fact that neutrinos are observed to be only left-handed could suggest that the Higgs mechanism doesn’t apply to them.

There is another special feature of neutrinos: they are much lighter than all the other elementary particles. Experiments constrain neutrinos to be at least a million times lighter than electrons, the next lightest particles. This disparity suggests that something is “pushing” the neutrino mass toward small values. The seesaw mechanism includes such a push [2–7]. Just like on a playground, two players are involved in this metaphorical seesaw: an observed neutrino on one end and a hypothetical neutrino on the other (Fig. 1). The quantum states of these two particles are mixed—meaning that one particle can potentially oscillate into the other. This mixing leads to an inverse relation between the masses of the two players: the heavier the hypothetical particle, the lighter the observed neutrino.
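In the simplest (type-I) version of the seesaw, the light neutrino mass is approximately m_ν ≈ m_D²/M, where m_D is a "Dirac" mass set by the Higgs interaction and M is the heavy neutrino mass. Holding m_D fixed and raising M pushes m_ν down, which is the inverse relation described above. A rough sketch; taking m_D ≈ 100 GeV (near the electroweak scale) is an illustrative assumption, not a value from the CMS study:

```python
def seesaw_light_mass_gev(m_dirac_gev: float, m_heavy_gev: float) -> float:
    """Type-I seesaw estimate: m_nu ~ m_D^2 / M (all masses in GeV/c^2)."""
    return m_dirac_gev**2 / m_heavy_gev

m_d = 100.0  # assumed Dirac mass near the electroweak scale, GeV
for m_heavy in (1e3, 1e10, 1e14):
    m_nu_ev = seesaw_light_mass_gev(m_d, m_heavy) * 1e9  # GeV -> eV
    print(f"M = {m_heavy:.0e} GeV -> m_nu ~ {m_nu_ev:.1e} eV")
```

With these assumptions, a heavy partner near 10¹⁴ GeV yields a light neutrino of order 0.1 eV.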

The heavy hypothetical neutrinos are “sterile” in that they do not participate in any of the known fundamental interactions. Another crucial feature of the seesaw mechanism is that neutrinos must be their own antiparticles—thus, evidence that neutrinos annihilate with themselves would provide support for this mechanism.

Researchers have for many years been searching for additional neutrinos. One probe involves observing neutrino oscillations and looking for signs of “missing” neutrinos. This scenario could occur, for example, if some of the electron neutrinos from a nuclear reactor transform into sterile neutrinos that can’t be detected. Some experiments have seen hints of sterile neutrinos (see Viewpoint: Neutrino Mystery Endures), but these possibly observed sterile neutrinos are much lighter than the predicted sterile neutrinos from the seesaw mechanism. At higher masses, global studies of neutrino data have uncovered no signs of missing neutrinos, allowing physicists to derive constraints on extra neutrinos that extend up to extremely high masses of 10¹⁵ GeV/c².

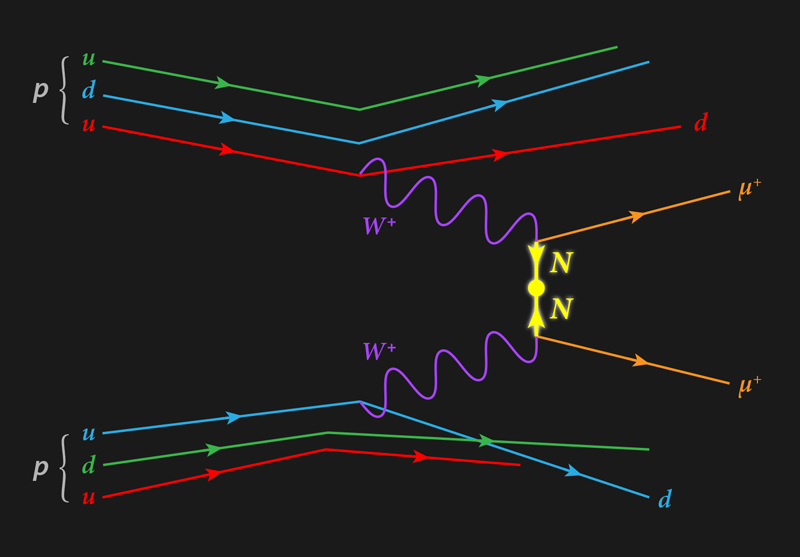

A more direct search for additional neutrinos relies on producing them in high-energy experiments. The CMS Collaboration has hunted for signs of extra neutrinos in collision data from the Large Hadron Collider (LHC) at CERN. The team’s target signal was a violation of lepton number, which is a kind of “charge” related to electrons, muons, tauons, and neutrinos (Fig. 2). So far, physicists have only seen processes that conserve lepton number (see Viewpoint: The Hunt for No Neutrinos) [8], but dedicated searches at high energy have been rare [9]. In this new study, the CMS Collaboration looked for collisions between protons (lepton number of 0) that produced either a pair of muons (lepton number of +2) or a pair of antimuons (lepton number of −2). Observing such dimuon events would imply muon neutrinos annihilating with themselves via their coupling to sterile neutrinos.
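The bookkeeping behind this signature is simple addition: leptons (e⁻, μ⁻, τ⁻, and neutrinos) carry lepton number +1, their antiparticles carry −1, and quarks, hence protons, carry 0. A minimal sketch with a hypothetical lookup table tallies the initial and final states; any quarks or jets produced alongside the muons carry no lepton number and are left out:

```python
# Lepton number of a few particles (hypothetical lookup table for illustration).
LEPTON_NUMBER = {
    "p": 0,            # proton: made of quarks, lepton number 0
    "mu-": +1,         # muon
    "mu+": -1,         # antimuon
    "nu_mu": +1,       # muon neutrino
    "anti_nu_mu": -1,  # muon antineutrino
}

def total_lepton_number(particles: list[str]) -> int:
    """Sum the lepton number over a list of particle labels."""
    return sum(LEPTON_NUMBER[name] for name in particles)

initial = ["p", "p"]
for final in (["mu-", "mu-"], ["mu+", "mu+"], ["mu-", "mu+"]):
    change = total_lepton_number(final) - total_lepton_number(initial)
    print(final, "delta L =", change, "(violates L)" if change else "(conserves L)")
```

Only the same-sign pairs change the total by ±2; an opposite-sign pair conserves lepton number and is not a signature of this process.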

The CMS team did not find evidence of lepton number violation in the muon data, which allowed the researchers to derive new bounds on the mixing of sterile neutrinos with muon neutrinos. These bounds improve over existing limits for sterile neutrino masses above 650 GeV/c², and they represent the strongest bounds from colliders for sterile neutrino masses up to 25 TeV/c². (It should be noted that oscillation experiments provide stronger bounds on sterile neutrinos, but these bounds do not include lepton number violation, and thus the relation to the seesaw mechanism is not straightforward [10].)

Putting these results into the context of the theoretical neutrino mass mechanism landscape, the CMS constraints on the mixing parameters are roughly 10 orders of magnitude weaker than the predicted values of these parameters in the simplest version of the seesaw mechanism. It is therefore unlikely that collider experiments can probe this simple version even in the future with more statistics and upgraded particle accelerators. Nevertheless, these results provide important constraints on popular variants of the seesaw mechanism, which generally feature larger mixings. In fact, these variants will continue to be a target of opportunity for future collider experiments, as well as for studies that combine searches for lepton number violation and searches for sterile neutrinos.
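A rough way to see the size of that gap: in the simplest seesaw, the squared mixing between a light and a heavy neutrino scales approximately as |V|² ≈ m_ν/M, which is tiny for heavy neutrinos at collider-accessible masses. The sketch below assumes m_ν ≈ 0.05 eV and M = 1 TeV purely for illustration; it is an order-of-magnitude estimate, not the CMS analysis:

```python
def naive_seesaw_mixing_sq(m_light_ev: float, m_heavy_gev: float) -> float:
    """Order-of-magnitude type-I seesaw mixing: |V|^2 ~ m_nu / M."""
    return (m_light_ev * 1e-9) / m_heavy_gev  # convert eV to GeV before dividing

v_sq = naive_seesaw_mixing_sq(m_light_ev=0.05, m_heavy_gev=1000.0)
print(f"|V|^2 ~ {v_sq:.0e}")  # |V|^2 ~ 5e-14
```

Mixings this small are far below what collider searches can currently reach, which is why variants of the seesaw with larger mixings are the realistic targets.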

While neutrino oscillation experiments are nearing the determination of all the oscillation parameters, understanding the underlying mechanism of neutrino mass generation requires searches beyond oscillation experiments. These neutrino mass studies are progressing on multiple fronts, but so far they have provided only constraints—rather than a discovery of the origin of neutrino masses.

References

- A. Tumasyan et al. (CMS Collaboration), “Probing heavy Majorana neutrinos and the Weinberg operator through vector boson fusion processes in proton-proton collisions at √s = 13 TeV,” Phys. Rev. Lett. 131, 011803 (2023).

- P. Minkowski, “μ → eγ at a rate of one out of 10⁹ muon decays?” Phys. Lett. B 67 (1977).

- P. Ramond, “The family group in grand unified theories,” (1979), arXiv:hep-ph/9809459.

- M. Gell-Mann et al., “Complex spinors and unified theories,” (1979), Conf. Proc. C 790927, arXiv:1306.4669.

- T. Yanagida, “Horizontal gauge symmetry and masses of neutrinos,” Conf. Proc. C 7902131 (1979) https://inspirehep.net/literature/143150.

- R. N. Mohapatra and G. Senjanović, “Neutrino mass and spontaneous parity nonconservation,” Phys. Rev. Lett. 44 (1980).

- J. Schechter and J. W. F. Valle, “Neutrino masses in SU(2) ⊗ U(1) theories,” Phys. Rev. D 22 (1980).

- S. Abe et al. (KamLAND-Zen Collaboration), “First search for the Majorana nature of neutrinos in the inverted mass ordering region with KamLAND-Zen,” (2022), arXiv:2203.02139.

- B. Fuks et al., “Probing the Weinberg operator at colliders,” Phys. Rev. D 103 (2021).

- E. Fernandez-Martinez et al., “Global constraints on heavy neutrino mixing,” J. High Energy Phys. 2016 (2016).


Muon g-2 doubles down with latest measurement, explores uncharted territory in search of new physics

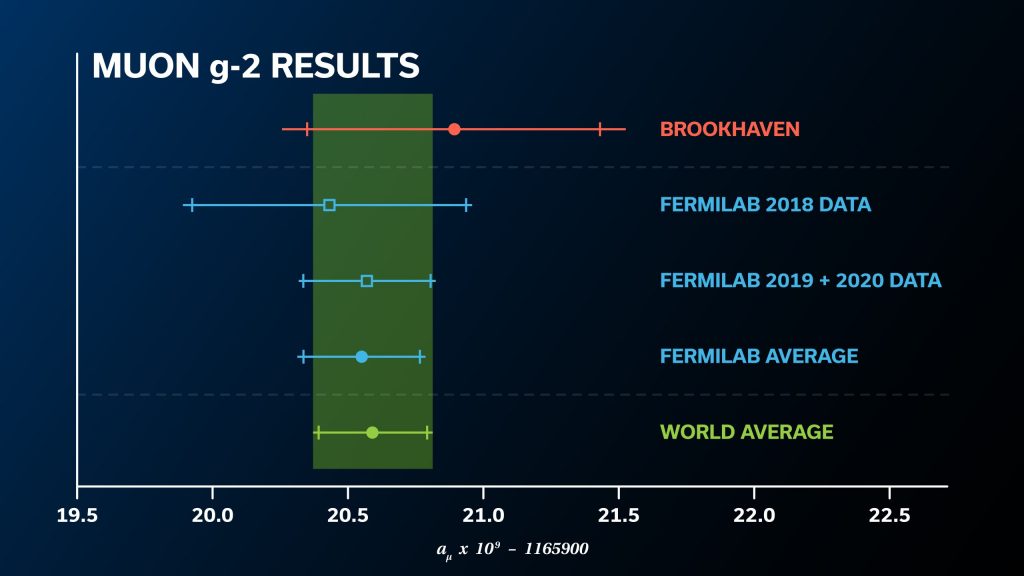

Physicists now have a brand-new measurement of a property of the muon called the anomalous magnetic moment that improves the precision of their previous result by a factor of 2.

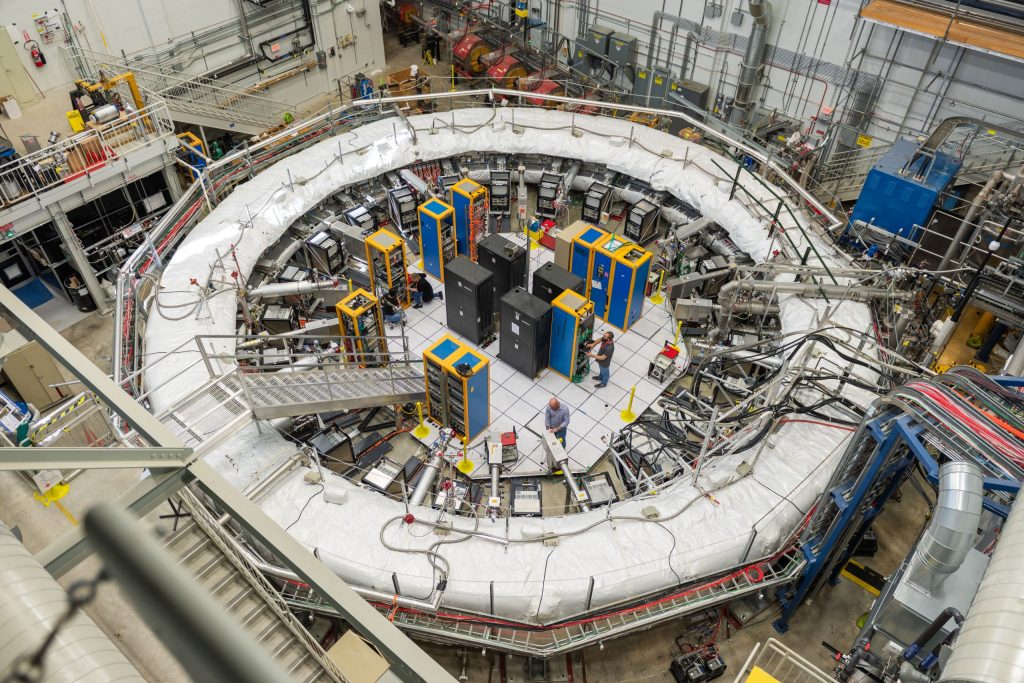

An international collaboration of scientists working on the Muon g-2 experiment at the U.S. Department of Energy’s Fermi National Accelerator Laboratory announced the much-anticipated updated measurement on Aug. 10. This new value bolsters the first result they announced in April 2021 and sets up a showdown between theory and experiment over 20 years in the making.

“We’re really probing new territory. We’re determining the muon magnetic moment at a better precision than it has ever been seen before,” said Brendan Casey, a senior scientist at Fermilab who has worked on the Muon g-2 experiment since 2008.

The announcement on Aug. 10, 2023, presents the second result from the Muon g-2 experiment at Fermilab, twice as precise as the first result announced on April 7, 2021. Photo: Ryan Postel, Fermilab

Physicists describe how the universe works at its most fundamental level with a theory known as the Standard Model. By making predictions based on the Standard Model and comparing them to experimental results, physicists can discern whether the theory is complete — or if there is physics beyond the Standard Model.

Muons are fundamental particles that are similar to electrons but about 200 times as massive. Like electrons, muons have a tiny internal magnet that, in the presence of a magnetic field, precesses or wobbles like the axis of a spinning top. The precession speed in a given magnetic field depends on the muon’s magnetic moment, which is characterized by a dimensionless number called the g-factor, or simply g; at the simplest level, theory predicts that g should equal 2.

The difference of g from 2 — or g minus 2 — can be attributed to the muon’s interactions with particles in a quantum foam that surrounds it. These particles blink in and out of existence and, like subatomic “dance partners,” grab the muon’s “hand” and change the way the muon interacts with the magnetic field. The Standard Model incorporates all known “dance partner” particles and predicts how the quantum foam changes g. But there might be more. Physicists are excited about the possible existence of as-yet-undiscovered particles that contribute to the value of g-2 — and would open the window to exploring new physics.

The new experimental result, based on the first three years of data, announced by the Muon g-2 collaboration is:

g-2 = 0.00233184110 +/- 0.00000000043 (stat.) +/- 0.00000000019 (syst.)

The measurement of g-2 corresponds to a precision of 0.20 parts per million. The Muon g-2 collaboration describes the result in a paper that they submitted today to Physical Review Letters.
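The quoted 0.20 ppm follows from the numbers above if the statistical and systematic uncertainties are combined in quadrature (the usual treatment for independent uncertainties) and divided by the central value. The sketch also converts to the anomalous magnetic moment a_μ = (g−2)/2, the form in which the result is often quoted:

```python
import math

g_minus_2 = 0.00233184110
stat = 0.00000000043
syst = 0.00000000019

total = math.hypot(stat, syst)            # quadrature sum, sqrt(stat^2 + syst^2)
precision_ppm = total / g_minus_2 * 1e6   # relative uncertainty in parts per million
a_mu = g_minus_2 / 2                      # anomalous magnetic moment

print(f"total uncertainty = {total:.2e}")      # total uncertainty = 4.70e-10
print(f"precision = {precision_ppm:.2f} ppm")  # precision = 0.20 ppm
print(f"a_mu = {a_mu:.11f}")                   # a_mu = 0.00116592055
```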

With this measurement, the collaboration has already reached their goal of decreasing one particular type of uncertainty: uncertainty caused by experimental imperfections, known as systematic uncertainties.

“This measurement is an incredible experimental achievement,” said Peter Winter, co-spokesperson for the Muon g-2 collaboration. “Getting the systematic uncertainty down to this level is a big deal and is something we didn’t expect to achieve so soon.”

Due to the large amount of additional data that is going into the 2023 analysis announcement, the Muon g-2 collaboration’s latest result is more than twice as precise as the first result announced in 2021. Image: Muon g-2 collaboration

While the total systematic uncertainty has already surpassed the design goal, the larger aspect of uncertainty — statistical uncertainty — is driven by the amount of data analyzed. The result announced today adds an additional two years of data to their first result. The Fermilab experiment will reach its ultimate statistical uncertainty once scientists incorporate all six years of data in their analysis, which the collaboration aims to complete in the next couple of years.
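Statistical uncertainty typically shrinks as 1/√N with the number of recorded events N, so each halving requires roughly four times as much data. A generic sketch of that scaling; the data-set ratios are illustrative, not the collaboration’s actual event counts:

```python
import math

def relative_stat_uncertainty(n_ratio: float) -> float:
    """Statistical uncertainty relative to a reference data set that is n_ratio times smaller."""
    return 1.0 / math.sqrt(n_ratio)

for ratio in (1, 2, 4, 16):
    print(f"{ratio:>2}x data -> {relative_stat_uncertainty(ratio):.3f}x stat. uncertainty")
```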

To make the measurement, the Muon g-2 collaboration repeatedly sent a beam of muons into a 50-foot-diameter superconducting magnetic storage ring, where they circulated about 1,000 times at nearly the speed of light. Detectors lining the ring allowed scientists to determine how rapidly the muons were precessing. Physicists must also precisely measure the strength of the magnetic field to then determine the value of g-2.
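What the detectors track is the anomalous precession frequency. To leading order, for a storage ring operated at the so-called magic momentum (where electric-field contributions cancel), ω_a = a_μ eB/m_μ with a_μ = (g−2)/2. The field value of about 1.45 T below is an assumption not stated in this article; with it, the precession comes out near 229 kHz:

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge, C
MUON_MASS = 1.883531627e-28  # muon mass, kg

def anomalous_precession_hz(a_mu: float, b_tesla: float) -> float:
    """Leading-order f_a = a_mu * e * B / (2 * pi * m_mu), valid at the magic momentum."""
    omega_a = a_mu * E_CHARGE * b_tesla / MUON_MASS  # angular frequency, rad/s
    return omega_a / (2 * math.pi)

a_mu = 0.00233184110 / 2  # from the g-2 value quoted in this article
b_field = 1.45            # assumed storage-ring field, tesla
print(f"f_a ~ {anomalous_precession_hz(a_mu, b_field) / 1e3:.0f} kHz")  # f_a ~ 229 kHz
```

Because f_a is proportional to both a_μ and B, the precession rate alone does not give g-2; the field must be measured just as precisely, as the text notes.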

The Fermilab experiment reused a storage ring originally built for the predecessor Muon g-2 experiment at DOE’s Brookhaven National Laboratory that concluded in 2001. In 2013, the collaboration transported the storage ring 3,200 miles from Long Island, New York, to Batavia, Illinois. Over the next four years, the collaboration assembled the experiment with improved techniques, instrumentation and simulations. The main goal of the Fermilab experiment is to reduce the uncertainty of g-2 by a factor of four compared to the Brookhaven result.

“Our new measurement is very exciting because it takes us well beyond Brookhaven’s sensitivity,” said Graziano Venanzoni, professor at the University of Liverpool affiliated with the Italian National Institute for Nuclear Physics, Pisa, and co-spokesperson of the Muon g-2 experiment at Fermilab.

In addition to the larger data set, this latest g-2 measurement is enhanced by updates to the Fermilab experiment itself. “We improved a lot of things between our first year of taking data and our second and third year,” said Casey, who recently finished his term as co-spokesperson with Venanzoni. “We were constantly making the experiment better.”

The experiment was “really firing on all cylinders” for the final three years of data-taking, which came to an end on July 9, 2023. That’s when the collaboration shut off the muon beam, concluding the experiment after six years of data collection. They reached the goal of collecting a data set that is more than 21 times the size of Brookhaven’s data set.

Physicists can calculate the effects of the known Standard Model “dance partners” on muon g-2 to incredible precision. The calculations account for the electromagnetic, weak nuclear and strong nuclear forces and involve particles such as photons, electrons, quarks, gluons, neutrinos, W and Z bosons, and the Higgs boson. If the Standard Model is correct, this ultra-precise prediction should match the experimental measurement.

Calculating the Standard Model prediction for muon g-2 is very challenging. In 2020, the Muon g-2 Theory Initiative announced the best Standard Model prediction for muon g-2 available at that time. But a new experimental measurement of the data that feeds into the prediction and a new calculation based on a different theoretical approach — lattice gauge theory — are in tension with the 2020 calculation. Scientists of the Muon g-2 Theory Initiative aim to have a new, improved prediction available in the next couple of years that considers both theoretical approaches.

The Muon g-2 collaboration comprises close to 200 scientists from 33 institutions in seven countries and includes nearly 40 students so far who have received their doctorates based on their work on the experiment. Collaborators will now spend the next couple of years analyzing the final three years of data. “We expect another factor of two in precision when we finish,” said Venanzoni.

The collaboration anticipates releasing their final, most precise measurement of the muon magnetic moment in 2025 — setting up the ultimate showdown between Standard Model theory and experiment. Until then, physicists have a new and improved measurement of muon g-2 that is a significant step toward its final physics goal.

The Muon g-2 collaboration submitted this scientific paper for publication.

This seven-minute video provides additional information about muons and the new result by the Muon g-2 collaboration.

Here is the recording of the scientific seminar held on Aug. 10, 2023.

The Muon g-2 experiment is supported by the Department of Energy (US); National Science Foundation (US); Istituto Nazionale di Fisica Nucleare (Italy); Science and Technology Facilities Council (UK); Royal Society (UK); European Union’s Horizon 2020; National Natural Science Foundation of China; MSIP, NRF and IBS-R017-D1 (Republic of Korea); and German Research Foundation (DFG).

Fermilab is America’s premier national laboratory for particle physics research. A U.S. Department of Energy Office of Science laboratory, Fermilab is located near Chicago, Illinois, and operated under contract by the Fermi Research Alliance LLC. Visit Fermilab’s website at https://www.fnal.gov and follow us on Twitter @Fermilab.

The DOE Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://science.energy.gov.

Reference: https://news.fnal.gov/2023/08/muon-g-2-doubles-down-with-latest-measurement/

The 50th anniversary of Apollo 11 lunar landing

The 50th anniversary of the Apollo 11 lunar landing was marked by an event titled “Half a century since the first step,” organized by the Kharazmi University Astronomy Association. The event, one of the International Astronomical Union (IAU) events, was held on July 2th at the Faculty of Earth Sciences, Kharazmi University, Tehran, Iran, and was sponsored by Nojum magazine and the Espash space study center. It included two lectures and a space museum expo. The first lecturer, science journalist Cyrus Borzu, explained the history of the “Moon landing” project, and the second lecturer, robotics researcher Aria Sabury, discussed the future of human space travel.